Wednesday, August 5, 2009

apache restart error - Starting httpd: (98)Address already in use

Starting httpd: (98)Address already in use: make_sock: could not bind to address [::]:80

(98)Address already in use: make_sock: could not bind to address 0.0.0.0:80

no listening sockets available, shutting down

Unable to open logs

[FAILED]

To resolve this, list the processes that have port 80 open with lsof, then kill the one that is holding the port:

[root@]# lsof -i :80

COMMAND PID USER FD TYPE DEVICE SIZE NODE NAME

perl 7448 apache 4u IPv6 33113387 TCP *:http (LISTEN)

[root@]# kill -9 7448

[root@]# /etc/init.d/httpd start

Starting httpd: [ OK ]
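The lookup step above can be scripted. The sketch below is only an illustration: the helper name pids_from_lsof is made up here, and the sample text is the lsof output shown above rather than a live query, so nothing is killed when it runs.

```shell
#!/bin/sh
# Print the unique PIDs found in `lsof -i :PORT` style output read from stdin.
# The first line is the column header, so it is skipped.
pids_from_lsof() {
    awk 'NR > 1 { print $2 }' | sort -u
}

# Sample input copied from the lsof output shown in this post.
sample='COMMAND PID USER FD TYPE DEVICE SIZE NODE NAME
perl 7448 apache 4u IPv6 33113387 TCP *:http (LISTEN)'

printf '%s\n' "$sample" | pids_from_lsof
```

On a live system the same helper could be fed with `lsof -i :80 | pids_from_lsof`. It is usually worth trying a plain `kill` (SIGTERM) first and keeping `kill -9` as the last resort.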

Wednesday, July 1, 2009

Voice Chat setup on Ubuntu - Using Empathy

Installation

First we add the Telepathy Launchpad PPA to get the latest binaries for Ubuntu Intrepid and Hardy.

Open /etc/apt/sources.list and add the lines for your release:

$ sudo gedit /etc/apt/sources.list

For Ubuntu Intrepid:

deb http://ppa.launchpad.net/telepathy/ppa/ubuntu intrepid main

deb-src http://ppa.launchpad.net/telepathy/ppa/ubuntu intrepid main

For Ubuntu Hardy:

deb http://ppa.launchpad.net/telepathy/ppa/ubuntu hardy main

deb-src http://ppa.launchpad.net/telepathy/ppa/ubuntu hardy main

Now run:

$ sudo apt-get update

Install Empathy and the required packages with this command:

$ sudo apt-get install empathy telepathy-gabble telepathy-mission-control telepathy-stream-engine telepathy-butterfly python-msn

Set Up Empathy

Start Empathy from Applications –> Internet –> Empathy Instant Messenger, or run the empathy command.

Enter your Google Talk account, click Advanced, and make sure the server is talk.google.com, the port is 5223, and "Use old SSL" is checked.

Saturday, June 27, 2009

Google Chrome for Linux

Google has also released official builds of Google Chrome for Linux and Mac OS X (see update below).

The Google Browser port, known as Crossover Chromium, is available for download on Mac OS X as a native Mac .dmg file or on Ubuntu, RedHat, Suse, etc. as standard Linux packages.

How to Install Google Browser on Linux

Linux users should use the appropriate tools for their respective Linux distributions to unpack the installer package. Google Chrome on Linux is available in both 32-bit and 64-bit versions.

If you installed Google Chrome on Linux using the .deb package, you can uninstall the Google Browser using the Synaptic package manager or via the following command - sudo aptitude purge cxchromium

Google Chrome for Mac & Linux - Official Builds

Update: The official builds of Google Chromium are now available for Linux and Mac here. The interface and features of Chromium for Mac OS X are similar to that of Chrome for Windows but it’s a developer release and not very stable yet.

For Ubuntu 9.04 click here

Friday, June 12, 2009

Crontab

This line executes the "ping" command every minute of every hour of every day of every month. The standard output is redirected to /dev/null, so normal output produces no e-mail, but anything written to standard error is still sent as an e-mail. If you never want any e-mail, change the command line to "/sbin/ping -c 1 192.168.2.187 > /dev/null 2>&1".

* * * * * /sbin/ping -c 1 192.168.2.187 > /dev/null

This line executes the "ping" and "ls" commands at 12am and 12pm on the 1st day of every 2nd month. The two commands are grouped in parentheses so that the output of both is appended to the log file /var/log/cronrun.

0 0,12 1 */2 * (/sbin/ping -c 1 192.168.2.187; ls -la) >>/var/log/cronrun

This line executes the disk usage command to get the directory sizes at 2am on the 1st through the 10th of each month. The output is e-mailed to the user who owns the job. Remember, if you're executing this command as root, to go into your /etc/aliases file and put your e-mail address in there. You could also put a .forward file in root's home dir to forward to another e-mail address.

0 2 1-10 * * du -h --max-depth=1 /

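This line is an example of running a cron job on the third Monday of every month at 4 a.m. Note that cron treats the day-of-month and day-of-week fields as an OR when both are restricted, so "0 4 15-21 * 1" would run on every day from the 15th to the 21st and also on every Monday. To get only the Monday that falls between the 15th and the 21st, restrict the day of month in the schedule and test the weekday in the command (the % must be escaped inside a crontab):

0 4 15-21 * * [ "$(date +\%u)" -eq 1 ] && /command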
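Since cron combines a restricted day-of-month with a restricted day-of-week using OR, one way to hit only the third Monday is to let the job itself check the date. The following is a minimal sketch of that check; the function name is_third_monday is invented for illustration and is not part of cron.

```shell
#!/bin/sh
# Succeeds when the given day of month (1-31) and ISO weekday (1 = Monday,
# as printed by `date +%u`) describe the third Monday of a month.
is_third_monday() {
    day=$1
    weekday=$2
    [ "$weekday" -eq 1 ] && [ "$day" -ge 15 ] && [ "$day" -le 21 ]
}

# Check today's date and report what a wrapper script would do.
if is_third_monday "$(date +%d)" "$(date +%u)"; then
    echo "third Monday: run the job"
else
    echo "not the third Monday: skip"
fi
```

A wrapper like this could be scheduled daily (or only on the 15th to the 21st) and would exit early on every day except the third Monday.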

Recompilation of Linux Kernel with GRSECURITY

cd /usr/src

wget http://www.kernel.org/pub/linux/kernel/v2.6/linux-2.6.17.11.tar.bz2

wget http://grsecurity.org/grsecurity-2.1.9-2.6.17.11-200608282236.patch.gz

tar -xjvf linux-2.6.17.11.tar.bz2

gunzip < default="0" requirements ="=">&1|grep reiserfsprogs

o xfsprogs 2.6.0 # xfs_db -V

o pcmciautils 004 # pccardctl -V

o quota-tools 3.09 # quota -V

o PPP 2.4.0 # pppd --version

o isdn4k-utils 3.1pre1 # isdnctrl 2>&1|grep version

o nfs-utils 1.0.5 # showmount --version

o procps 3.2.0 # ps --version

o oprofile 0.9 # oprofiled --version

o udev 081 # udevinfo -V

Kernel compilation

root@fast [~/support/linux-2.6.20/Documentation]# vi Changes

Basic tools:

automake

autoconf

binutils

bison

byacc

cdecl

dev86

flex

gcc

gcc-c++

gdb

gettext

libtool

make

perl-CPAN

pkgconfig

python-devel

redhat-rpm-config

rpm-build

strace

texinfo

grsecurity

grsecurity is an innovative approach to security utilizing a multi-layered detection, prevention, and containment model. It is licensed under the GPL.

It offers among many other features:

- An intelligent and robust Role-Based Access Control (RBAC) system that can generate least privilege policies for your entire system with no configuration

- Change root (chroot) hardening

- /tmp race prevention

- Extensive auditing

- Prevention of arbitrary code execution, regardless of the technique used (stack smashing, heap corruption, etc)

- Prevention of arbitrary code execution in the kernel

- Randomization of the stack, library, and heap bases

- Kernel stack base randomization

- Protection against exploitable null-pointer dereference bugs in the kernel

- Reduction of the risk of sensitive information being leaked by arbitrary-read kernel bugs

- A restriction that allows a user to only view his/her processes

- Security alerts and audits that contain the IP address of the person causing the alert

Thursday, June 4, 2009

Using Grep Command

The grep command searches files or standard input globally for lines matching a given regular expression, and prints them to the program's standard output.

sahab@sahab-desktop:~$ grep root /etc/passwd

root:x:0:0:root:/root:/bin/bash

sahab@sahab-desktop:~$ grep -n root /etc/passwd

1:root:x:0:0:root:/root:/bin/bash

-n, --line-number

Prefix each line of output with the line number within its input

file.

sahab@sahab-desktop:~$ grep -v bash /etc/passwd | grep -v nologin

daemon:x:1:1:daemon:/usr/sbin:/bin/sh

bin:x:2:2:bin:/bin:/bin/sh

sys:x:3:3:sys:/dev:/bin/sh

sync:x:4:65534:sync:/bin:/bin/sync

games:x:5:60:games:/usr/games:/bin/sh

man:x:6:12:man:/var/cache/man:/bin/sh

-v, --invert-match

Invert the sense of matching, to select non-matching lines.

sahab@sahab-desktop:~$ grep -c bash /etc/passwd

4

sahab@sahab-desktop:~$ grep -c nologin /etc/passwd

1

-c, --count

Suppress normal output; instead print a count of matching lines

for each input file. With the -v, --invert-match option (see

below), count non-matching lines.

sahab@sahab-desktop:~$ grep -i ps ~/.bash*

/home/sahab/.bash_history:ps -ax

/home/sahab/.bash_history:tops

/home/sahab/.bash_history:ps -ax | grep -i giis

/home/sahab/.bash_history:ps -ax

/home/sahab/.bashrc:[ -z "$PS1" ] && return

/home/sahab/.bashrc:export HISTCONTROL=$HISTCONTROL${HISTCONTROL+,}ignoredups

/home/sahab/.bashrc:# ... or force ignoredups and ignorespace

sahab@sahab-desktop:~$ grep -i ps ~/.bash* | grep -v history

/home/sahab/.bashrc:[ -z "$PS1" ] && return

-i, --ignore-case

Ignore case distinctions in both the PATTERN and the input

files.

To search /home/sahab recursively and list only the names of the files that contain "ar" as a whole word:

$ grep -rwl 'ar' /home/sahab/

grep: /home/sahab/.kde/socket-sahab-desktop: No such file or directory

grep: /home/sahab/.kde/tmp-sahab-desktop:

-R, -r, --recursive

Read all files under each directory, recursively; this is

equivalent to the -d recurse option.

-w, --word-regexp

Select only those lines containing matches that form whole

words.

-x, --line-regexp

Select only those matches that exactly match the whole line.

-l, --files-with-matches

Suppress normal output; instead print the name of each input

file from which output would normally have been printed. The

scanning will stop on the first match.

To display only the lines that start with the string "root", anchor the pattern with a caret:

$ grep ^root /etc/passwd

root:x:0:0:root:/root:/bin/bash

The caret ^ and the dollar sign $ are meta-characters that respectively

match the empty string at the beginning and end of a line. The symbols

\< and \> respectively match the empty string at the beginning and end

of a word. The symbol \b matches the empty string at the edge of a

word, and \B matches the empty string provided not at the edge of

a word.

$ grep -w / /etc/fstab

UUID=8d7122c6-6549-4622-83b8-7855ad822edc / ext3 relatime,errors=remount-ro 0 1

$ grep / /etc/fstab

#/etc/fstab: static file system information.

proc /proc proc defaults 0 0

# /dev/sda6

UUID=8d7122c6-6549-4622-83b8-7855ad822edc / ext3 relatime,errors=remount-ro 0 1

# /dev/sda7

UUID=f9ca09b2-9a7d-472d-9ecd-a8d156737559 /data ext3 relatime 0 2

# /dev/sda3

/dev/scd0 /media/cdrom0 udf,iso9660 user,noauto,exec,utf8 0 0

Here is an example shell command that invokes GNU `grep':

grep -i 'hello.*world' menu.h main.c

This lists all lines in the files `menu.h' and `main.c' that contain the string `hello' followed by the string `world'; this is because `.*' matches zero or more characters within a line. The `-i' option causes `grep' to ignore case, causing it to match the line `Hello, world!', which it would not otherwise match.

Here are some common questions and answers about `grep' usage.

1. How can I list just the names of matching files?

grep -l 'main' *.c

lists the names of all C files in the current directory whose

contents mention `main'.

2. How do I search directories recursively?

grep -r 'hello' /home/sahab

searches for `hello' in all files under the directory

`/home/sahab'. For more control of which files are searched, use

`find', `grep' and `xargs'. For example, the following command

searches only C files:

find /home/sahab -name '*.c' -print | xargs grep 'hello' /dev/null

This differs from the command:

grep -r 'hello' *.c

which merely looks for `hello' in all files in the current directory

whose names end in `.c'.

3. What if a pattern has a leading `-'?

grep -e '--cut here--' *

searches for all lines matching `--cut here--'. Without `-e',

`grep' would attempt to parse `--cut here--' as a list of options.

4. Suppose I want to search for a whole word, not a part of a word?

grep -w 'hello' *

searches only for instances of `hello' that are entire words; it

does not match `Othello'. For more control, use `\<' and `\>' to

match the start and end of words. For example:

grep 'hello\>' *

searches only for words ending in `hello', so it matches the word

`Othello'.

5. How do I output context around the matching lines?

grep -C 2 'hello' *

prints two lines of context around each matching line.

6. How do I force grep to print the name of the file?

Append `/dev/null':

grep 'test' /etc/passwd /dev/null

gets you:

/etc/passwd:test:x:1002:1002:,,,:/home/test:/bin/bash

7. Why do people use strange regular expressions on `ps' output?

ps -ef | grep '[c]ron'

If the pattern had been written without the square brackets, it

would have matched not only the `ps' output line for `cron', but

also the `ps' output line for `grep'. Note that on some platforms

`ps' limits the output to the width of the screen; grep does not

have any limit on the length of a line except the available memory.

8. Why does `grep' report "Binary file matches"?

If `grep' listed all matching "lines" from a binary file, it would

probably generate output that is not useful, and it might even

muck up your display. So GNU `grep' suppresses output from files

that appear to be binary files. To force GNU `grep' to output

lines even from files that appear to be binary, use the `-a' or

`--binary-files=text' option. To eliminate the "Binary file

matches" messages, use the `-I' or `--binary-files=without-match'

option.

9. Why doesn't `grep -lv' print nonmatching file names?

`grep -lv' lists the names of all files containing one or more

lines that do not match. To list the names of all files that

contain no matching lines, use the `-L' or `--files-without-match'

option.

10. I can do OR with `|', but what about AND?

grep 'sahab' /etc/motd | grep 'ubuntu,jaunty'

finds all lines that contain both `sahab' and 'ubuntu,jaunty'.

11. How can I search in both standard input and in files?

Use the special file name `-':

cat /etc/passwd | grep 'sahab' - /etc/motd

12. How to express palindromes in a regular expression?

It can be done by using back-references; for example, a

palindrome of 5 characters can be written as a BRE:

grep -w -e '\(.\)\(.\).\2\1' file

It matches the word "radar" or "civic".

Guglielmo Bondioni proposed a single RE that finds all the

palindromes up to 19 characters long.

egrep -e '^(.?)(.?)(.?)(.?)(.?)(.?)(.?)(.?)(.?).?\9\8\7\6\5\4\3\2\1$' file

Note that this relies on GNU ERE extensions; it might not be

portable to other greps.
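The whole-word behaviour from question 4 is easy to try on a throwaway file. The sketch below assumes GNU grep (for the `\>' operator); the sample lines are invented for the demonstration.

```shell
#!/bin/sh
# Build a small temporary file to search.
tmp=$(mktemp)
printf '%s\n' 'hello world' 'Othello is a play' 'say hello' 'hellos' > "$tmp"

# -w: count only lines where "hello" stands alone as a word.
whole_word=$(grep -c -w 'hello' "$tmp")

# \> anchors only the end of a word, so "Othello" also qualifies.
word_end=$(grep -c 'hello\>' "$tmp")

echo "whole word: $whole_word, word ending in hello: $word_end"
rm -f "$tmp"
```

With `-w' the `Othello' and `hellos' lines are both rejected, while `hello\>' rejects only `hellos', which is the difference the answer to question 4 describes.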

Friday, May 29, 2009

Installation of Sun xVM VirtualBox on Ubuntu 8.10 Intrepid Ibex and Ubuntu9.04 Jaunty Jackalope

This tutorial shows how you can install Sun xVM VirtualBox on the Ubuntu 8.10 Intrepid Ibex desktop and on Ubuntu 9.04 Jaunty Jackalope. VirtualBox is similar to VMware: using VirtualBox you can create and run guest operating systems (virtual machines) such as Linux and Windows under a host operating system.

- Install Sun Virtualbox on Ubuntu9.04 Jaunty Jackalope

You only need this command:

sudo apt-get install virtualbox

For Ubuntu 8.10 Intrepid Ibex desktop

First we have to add the VirtualBox repository to our apt sources. Enter the three lines together, one after the other:

/bin/echo "# VirtualBox repository for Ubuntu 8.10 Intrepid Ibex
deb http://download.virtualbox.org/virtualbox/debian intrepid non-free" \
| /usr/bin/sudo /usr/bin/tee /etc/apt/sources.list.d/intrepid-virtualbox.list

Then we install the key for this repository:

/usr/bin/wget http://download.virtualbox.org/virtualbox/debian/sun_vbox.asc -O- | sudo apt-key add -

Now we have to update our package lists:

/usr/bin/sudo /usr/bin/apt-get update

At the end install VirtualBox :

/usr/bin/sudo /usr/bin/apt-get install virtualbox-2.0

Now you will find the application on your ubuntu start menu:

Applications > System Tools

To be sure that everything works, we rebuild the kernel module that VirtualBox needs:

/usr/bin/sudo /etc/init.d/vboxdrv setup

If you have problems running the application, use the command above, because it forces the module to be rebuilt so that the application can launch. Now we add the users that have permission to use VirtualBox:

/usr/bin/sudo /usr/sbin/adduser $USER vboxusers

If you want to use the application immediately, run the commands below:

/usr/bin/sudo /etc/init.d/udev reload

/usr/bin/sudo /sbin/modprobe vboxdrv

/bin/su -c /usr/bin/VirtualBox $USER

And it is done :)

Now we are ready to install virtual machines in VirtualBox. Below I describe how to use VirtualBox to install RHEL 5.

First open VirtualBox from Applications > System Tools (see pic1).

Pic1

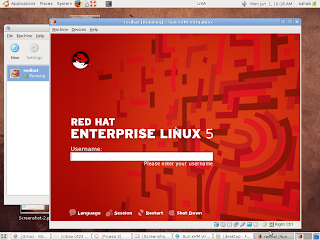

Click next and choose to install RHEL 5, then give a name to the virtual machine (see pic2):

Pic2

Next, choose how much memory you want to give RHEL 5 (the standard value is a good choice) and click next (see pic3).

Pic3

Next, create the virtual disk for RHEL 5 (I chose 2 GB) and click next (see pic4).

Then choose a fixed-size image (pic4) and click next,

and then choose the image and finish.

Before you click finish, insert the RHEL 5 CD, then click finish. A new screen will ask you where you want to install RHEL 5 from; choose CD/DVD:

Then the installation of RHEL will run automatically from the CD/DVD.

Now follow the normal installation procedure of RHEL 5.

Now RHEL 5 is installed on your Ubuntu. Enjoy!

Tuesday, May 19, 2009

Brasero Burner On Ubuntu

Brasero now supports multisession and the Joliet extension, can write an image to a hard drive, and can check disc file integrity for data CDs and DVDs. Features for burning audio CDs include writing CD-TEXT information, on-the-fly burning, support for all audio files handled by the local GStreamer installation (ogg, flac, mp3, ...), and searching for audio files inside dropped folders. Using the Brasero CD/DVD copy option you can copy a CD/DVD to the hard drive, copy CDs and DVDs on the fly, and use single-session data DVDs; it supports any type of CD. Other features worth noting are erasing CDs/DVDs, saving and loading projects, burning CD/DVD images and cue files, a song, image and video previewer, and device detection.

Here you can see Brasero burner is located under Applications --- Sound & Video --- Brasero Disc Burning on the Ubuntu desktop.

After opening Brasero CD/DVD burning program you can see in the screen shot below four options. Create a traditional audio CD, data CD/DVD, copy CD/DVD, and Burn an image to CD/DVD.

You may be asked to make Brasero burner your default burning application, here you may select yes or no and additionally don't show this dialogue again.

Here we see the data CD creation interface. One nice feature is the many ways that files can be added to the window on the right especially drag and drop to add or remove files.

When you've added all of the data files you want, select the burn button.

Now just specify where you're burning to, how many copies you want, your disc's label, and other options. Click burn and you're done.

Tomcat6 Installation on Ubuntu

If you are running Ubuntu and want to use the Tomcat servlet container, you should not use the version from the repositories as it just doesn't work correctly. Instead you'll need to use the manual installation process that I'm outlining here.

Before you install Tomcat you'll want to make sure that you've installed Java. I would assume if you are trying to install Tomcat you've already installed Java, but if you aren't sure you can check with the dpkg command like so:

dpkg --get-selections | grep sun-java

This should give you this output if you already installed java:

install sun-java6-bin

install sun-java6-jdk

install sun-java6-jre

If that command has no results, you'll want to install the latest version with this command:

sudo apt-get install sun-java6-jdk

Installation

Now we'll download and extract Tomcat from the apache site. You should check to make sure there's not another version and adjust accordingly.

wget http://www.ibiblio.org/pub/mirrors/apache/tomcat/tomcat-6/v6.0.18/bin/apache-tomcat-6.0.18.tar.gz

Then extract it:

tar xvzf apache-tomcat-6.0.18.tar.gz

The best thing to do is move the tomcat folder to a permanent location. I chose /usr/local/tomcat, but you could move it somewhere else if you wanted to.

sudo mv apache-tomcat-6.0.18 /usr/local/tomcat

Tomcat requires the JAVA_HOME variable to be set. The best way to do this is to set it in your .bashrc file; you could also edit your startup.sh file if you so chose. You'll have to log out of the shell and back in for the change to take effect.

nano ~/.bashrc

Add the following line:

export JAVA_HOME=/usr/lib/jvm/java-6-sun

At this point you can start tomcat by just executing the startup.sh script in the tomcat/bin folder.

Automatic Starting

To make tomcat automatically start when we boot up the computer, you can add a script to make it auto-start and shutdown.

sudo nano /etc/init.d/tomcat6

Now paste in the following:

#!/bin/sh

# Tomcat auto-start

#

# description: Auto-starts tomcat

# processname: tomcat

# pidfile: /var/run/tomcat.pid

export JAVA_HOME=/usr/lib/jvm/java-6-sun

case $1 in

start)

sh /usr/local/tomcat/bin/startup.sh

;;

stop)

sh /usr/local/tomcat/bin/shutdown.sh

;;

restart)

sh /usr/local/tomcat/bin/shutdown.sh

sh /usr/local/tomcat/bin/startup.sh

;;

esac

exit 0

You'll need to make the script executable by running the chmod command:

sudo chmod 755 /etc/init.d/tomcat6

The last step is actually linking this script to the startup folders with a symbolic link. Execute these two commands and we should be on our way.

sudo ln -s /etc/init.d/tomcat6 /etc/rc1.d/K99tomcat6

sudo ln -s /etc/init.d/tomcat6 /etc/rc2.d/S99tomcat6

Features of Ubuntu 9.04 Server Edition

The new version of Ubuntu, 9.04, is out and users are looking forward to using it. Here are some of the features that you can expect in Ubuntu 9.04 Server Edition.

1. Ubuntu 9.04 server edition has extended hardware compatibility, which makes it easier for users to deploy it for their needs.

2. OEMs can now pre-install their own default first-boot configuration of Ubuntu Server Edition. This configuration can be replicated across all the Ubuntu servers in their organization.

3. Ubuntu 9.04 server edition on Amazon EC2 lets businesses deploy services to external clouds.

4. Ubuntu 9.04 server edition comes with new mail server features that include shared user authentication and enhanced spam protection.

5. Ubuntu 9.04 server edition encourages interoperability by integrating OpenChange and Microsoft Exchange.

For more details about Ubuntu 9.04 server edition, click this link.

How to Install OpenOffice 3.0 on Ubuntu 8.10

Below is how to install OpenOffice 3.0 on Ubuntu 8.10 (Intrepid Ibex).

1. Go to System -> Administration -> Software Sources

2. Go to the second tab, "Third-Party Software," click on the "Add" button, and paste the line below.

deb http://ppa.launchpad.net/openoffice-pkgs/ubuntu intrepid main

3. Then click the “Close” button, then the “Reload” one, and wait for the application to close!

4. When the Software Sources window closes, the update icon will appear in the system tray.

5. Click on it and update your system! Your open source office suite will be up-to-date from now on. Take a look below for some shots of OpenOffice.org 3.0 in Ubuntu 8.10 (Intrepid Ibex).

Friday, May 15, 2009

Some Useful Scripts

A nice script to find the number of connections from each IP address to Apache:

root@sahab-desktop# netstat -n|grep :80|awk {'print $5'}|awk -F: {'print $1'}>netlist;for i in `sort -u netlist` ;do echo -n "$i->";grep -cxF "$i" netlist; done;

How to remove frozen messages from the mail queue (exim)

This command removes the frozen messages from the mail queue:

exim -bp|grep -F '*** frozen ***' |awk {'print $3'} |xargs exim -Mrm

Command to find all of the files which have been accessed within the last 10 days

The following command will find all of the files which have been accessed within the last 10 days:

find / -type f -atime -10 > Monthname.files

This command finds everything under the root directory ‘/’ whose type is regular file. ‘-atime -10’ selects the files accessed less than 10 days ago, and the output is written to a file called Monthname.files.

Find and remove editor backup files

find . -name '*~' -type f -print

find . -name '*~' -type f -print |xargs rm -f
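Going back to the connection-count script at the top of this post, the same tally can be done in one awk pass without a temporary file. This is only a sketch: the function name count_by_ip and the sample addresses are made up, and it assumes IPv4 lines in the usual `netstat -n` column layout (local address in column 4, foreign address in column 5).

```shell
#!/bin/sh
# Count connections per remote IPv4 address from `netstat -n` style lines on stdin.
# Only lines whose local address ends in :80 are counted.
count_by_ip() {
    awk '$4 ~ /:80$/ { split($5, a, ":"); n[a[1]]++ }
         END { for (ip in n) print ip "->" n[ip] }' | sort
}

# Hypothetical sample lines standing in for real netstat output.
sample='tcp 0 0 10.0.0.5:80 192.168.1.10:51234 ESTABLISHED
tcp 0 0 10.0.0.5:80 192.168.1.10:51240 TIME_WAIT
tcp 0 0 10.0.0.5:80 192.168.1.11:40000 ESTABLISHED
tcp 0 0 10.0.0.5:22 192.168.1.12:40100 ESTABLISHED'

printf '%s\n' "$sample" | count_by_ip
```

On a real server the input would come from `netstat -n | count_by_ip`. Matching on the local port column keeps connections to other ports, such as 8080, out of the count.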

Wednesday, May 13, 2009

Team Viewer on ubuntu

Hi

TeamViewer establishes connections to any PC or server all around the world within just a few seconds. It works perfectly on Windows. I wanted to try it on Ubuntu, but no .deb packages are available, so I used Wine to run the application on Ubuntu.

Installing Wine

Run in a terminal:

sudo apt-get install wine

Download the TeamViewer .exe

Right-click TeamViewer_setup.exe and select "Open with Wine Windows Program Loader", then follow the usual installation steps.

Now TeamViewer is ready to use as you like.

Thursday, May 7, 2009

Run a Command without SUDO password - Ubuntu

Today I set up my client PC so that the shutdown and reboot commands can be run without entering the sudo password. The details are given below.

Use the command "sudo visudo" to edit the file. Now look for the line "# User alias specification" and add a list of users as follows:

# User alias specification

User_Alias USERS = user1, user2, user3

# Cmnd alias specification

Cmnd_Alias SHUTDOWN_CMDS = /sbin/shutdown, /sbin/reboot, /sbin/halt

%admin ALL=(ALL) ALL

USERS ALL=(ALL) NOPASSWD: SHUTDOWN_CMDS

EDIT: By the way, the reason that these commands can't be used by normal users is that Linux was designed as a multi-user system. You don't want one user shutting down the system while others are using it!

Monday, May 4, 2009

How to Effectively Use Your Bandwidth

To Readers: All those starting with # are run by root user and ;'s are comments inside the configuration files

We are going to use the delay_pools TAG in squid.

Before going straight into the configuration, I would like to write some theory.

What exactly are delay pools?

They are simply pools which make a delayed response.

They are essentially bandwidth buckets!

Some of you might have quizzically raised your eyebrows when you read buckets, I know! I too was very much confused about this bucket concept! But I think I can clarify the whole concept for you!

Imagine a bandwidth bucket as a normal plastic bucket used for storing water! Instead of water, these buckets store bandwidth! Initially it will be full! Initially means when no one is using your bandwidth. When a user requests a page, he will get the response only if there's enough bandwidth available in the bucket he is using. The bucket actually stores traffic! Bandwidth is expressed in terms of how much data is available in one second, like 1Mb/s (1Mbps)

Traffic is expressed in terms of total data, like 1MB.

The size of the bucket determines how much bandwidth is available to a client(s). If a bucket starts out full, a client can take as much traffic as it needs until the bucket becomes empty. The client then receives its bucket allotment at the 'fill rate'. (I will talk about the fill rate later, just keep that word in mind).

There are three types of delay pools.

Class 1 => Single aggregate bucket (Totally shared among the members of the bucket)

Class 2 => To understand it better, assume it's applied to Class C networks.

There's one bucket for the network and 256 individual buckets, one for each IP of the network. The size of an individual bucket cannot exceed the network bucket!

Class 3 => One aggregate bucket, 256 network buckets, 65536 individual buckets. (Class B networks)

Now into configuration,

Firstly we need to define how many delay pools we are going to declare.

delay_pools 2

This means that we have two delay pools.

delay_class 1 3

This means that the first pool is a class 3 pool (Class B networks)

delay_class 2 1

This means that the second pool is a class 1 pool (Single aggregate bucket)

For each pool we should have a delay_class line.

Now we need to define each pool's parameters, like the capacity of each pool and its fill rate.

delay_parameters 1 7000/15000 3000/4000 1000/2000

These are the delay pool parameters for pool 1.

Pool 1 was a class 3 pool. A class 3 pool has 3 kinds of buckets: one aggregate bucket, 256 network buckets and 65536 individual IP buckets!

delay_parameters 2 2000/8000

The second pool is of type class 1. Class 1 has only one aggregate bucket!

Now what's this 2000/8000?

Each bucket is described by its rate/size.

Here 8000 is the maximum capacity of the bucket, in bytes!

And it refills at the rate of 2000 bytes/second

This means that if the bucket is empty, it takes 4 seconds for the bucket to get full if no clients are accessing it!

If you find a declaration like this,

delay_parameters 2 -1/-1

This means there's no limitation on the bucket!

Now lets take an example.

Our ISP connection is 12Mbps and we want our machines to have a maximum of 4 Mbps at peak time.

The rest we dedicate for SMTP or other production servers. We are going to define only one delay pool of class 1

What is actually 12Mbps?

1Mbps = 1 Megabits per second => 1/8 Megabytes per second (8 bits = 1 byte)

1/8 Megabytes per second => 1/8 * 1024 Kilobytes per second => 128Kilobytes per second => 128KBps

so 1 Mbps => 128 KBps

so 12 Mbps => 128 * 12 = 1536 KBps => 1.5 MBps

To sum up

so 12 Mbps = 12/8 MBps => 1.5 MBps

So with this ISP connection we can download a 6 MB file in 4 seconds!

So here the maximum bandwidth available to machines must be 4 Mbps only! (4Mbps ~ 0.5 MBps ~ 512 KBps)

delay_pools 1

delay_class 1 1

delay_parameters 1 524288/1048576

524288 => 524288/1024 KB => 512 KB => 512/1024 MB => 0.5 MB => 0.5*8 => 4Mb

1048576 => 1048576/1024 KB => 1024 KB => 1 MB
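The arithmetic above can be scripted so the numbers for delay_parameters do not have to be worked out by hand. This is just a sketch: the helper name mbps_to_bytes_per_sec is made up, it follows the same 1024-based convention used in this post, and making the bucket twice the rate simply mirrors the example rather than any rule.

```shell
#!/bin/sh
# Convert a link speed in Mbps to bytes per second, using the same
# convention as the text (1 Mb = 1024 * 1024 bits, 8 bits = 1 byte).
mbps_to_bytes_per_sec() {
    echo $(( $1 * 1024 * 1024 / 8 ))
}

rate=$(mbps_to_bytes_per_sec 4)   # restore rate for the 4 Mbps cap
size=$(( rate * 2 ))              # bucket size: two seconds of traffic, as in the example

echo "delay_parameters 1 $rate/$size"
```

For the 4 Mbps cap this prints the same 524288/1048576 pair used in the configuration above.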

Initially the bucket will be full (1 MB traffic). Now a client makes a request to download a 5 MB file.

It will get the maximum speed(12 Mbps) until it downloads 1 MB, but after that it gets only 0.5 MBps

For 1 MB, it takes 1 second as full bucket is available at first. As the bucket drains, it fills at the rate of 0.5 MBps only.

So 0.5 MBps will only be available after 1 MB has been downloaded!

So the file will get downloaded in 9 seconds. (This is all in theory :P)
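The 9 second figure can be reproduced with a few lines of shell arithmetic. This is only a sketch of the simplified model used above (the first bucketful arrives in about one second, the rest at the refill rate); the function name estimate_seconds is invented, and real transfers will not match it exactly.

```shell
#!/bin/sh
# Rough download time in seconds under the simplified model in the text.
# Arguments: file size, bucket size and refill rate, all in bytes.
estimate_seconds() {
    file=$1
    bucket=$2
    rate=$3
    if [ "$file" -le "$bucket" ]; then
        # The whole file fits in the initial full bucket.
        echo 1
    else
        remaining=$(( file - bucket ))
        # Round up so that a partial second still counts.
        echo $(( 1 + (remaining + rate - 1) / rate ))
    fi
}

# 5 MB file, 1 MB bucket, 0.5 MB/s refill rate.
estimate_seconds $(( 5 * 1024 * 1024 )) 1048576 524288
```

The model ignores that the bucket keeps refilling while it drains, so treat the result as a ballpark figure, just like the estimate in the text.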

There's another TAG associated with delay_pools.

delay_initial_bucket_level => this parameter expects a value in percentage (%)

This parameter specifies how much bandwidth is put in each bucket when the squid service starts.

By default, the value will be 50%, which means that in the previous example, the client will

download at full speed till the download reaches 0.5 MB

eg:

acl throttled src 10.0.0.1-10.0.0.50

delay_pools 1

delay_class 1 1

delay_parameters 1 524288/1048576

delay_access 1 allow throttled

Note: delay_access is very similar to http_access. It determines which delay pool a request falls into!

Hope this was useful for you!

Friday, May 1, 2009

Review and Future of Ubuntu Jaunty 9.04

Jaunty is very good. It's the first version of a Linux distribution that I'd pretty much recommend to anyone to try (with only a few minor caveats about possible hardware issues and the fact that Linux users still can't watch Netflix). It has made vast improvements over Hardy from a year ago. It's stable, snappy, and quite a number of annoyances that I once experienced have been fixed or minimized. Below I go through these in more detail. Now, though, I want to focus on what I hope for in the next version of Ubuntu (codenamed "Karmic Koala").

Cloud computing is the wave of the future, so I'm very happy this development cycle is making this a focus. But I'm doubtful they will go far enough to really make a lot of difference for most people. What people want now is to be able to sync up their data with the various computers they possess and with the online services they rely on. To this effect:

Evolution needs to sync seamlessly with Google Calendar, email, and contacts.

Evolution also needs to sync seamlessly with services like "Remember the Milk". Tasque does this OK presently on Linux, but it has a way to go to be really polished

Tomboy needs to be able to sync across multiple computers. Yes, there is the beginning of such functionality already built into it, but it's hard to set up, and even when it is, it doesn't sync automatically.

Thank god for Dropbox, an excellent program that should be further promoted

Certain features need to be implemented consistently:

I really like the new notification system, but programs like Firefox and Amarok have not been made to use this system.

Pulseaudio is MUCH better than it was a year ago, but it still causes problems on occasion. Hammer out the rest of the details so we can move on!

Wireless connectivity has also improved greatly, but I still sometimes have problems connecting (or the connection drops) when people around me on a Mac or Windows have no problem. On occasion I've had to abandon Network Manager altogether for WICD, which sometimes works when the former doesn't.

Either switch to better programs, or put money towards the improvement of certain obvious deficiencies.

It's time to give up on Rhythmbox; Banshee is fast outgrowing the maintenance-only iterations of Rhythmbox. That said, if Banshee doesn't include a watch folders option in the next release, I will totally take back this claim.

Tracker Search Tool is my least favorite program on Ubuntu. It sucks so bad it hurts. But Beagle is a memory hog, so at present there doesn't seem to be a good search tool on Linux (especially for someone with tens of thousands of files, like me).

Evolution is not a pleasure to use. I'm waiting for Thunderbird 3 to come out and put real pressure on Evolution developers to make it a better program. It needs to seamlessly integrate with online services. A makeover is really overdue as well.

Multimedia editing is slowly progressing overall. A year ago I couldn't find a video editor I liked, whereas now I'm quite a fan of Kdenlive. Audacity didn't work at all, but now does. Keep up the good work here!

Reviewing Previous Annoyances

A GUI wrapper for ufw: gufw is pretty good except that I still haven't figured out how to set up internet connection sharing with it. But Firestarter works fine, so I'm going to mark this one off the list.

Two-way synchronization between Evolution and Google Calendar: Calendar synchronization is there, but I don't quite trust it until it's been tested and advertised by developers.

Advanced Desktop settings already pre-installed (or some simplified version of it). NO. Still an annoyance, but I've gotten bored enough with configuring it that I no longer do

Tracker Search Tool needs to have a phrase search. NO. HAHA, tracker isn't even included in Jaunty. And that is for the better. Tracker Search Tool still sucks ass.

Bug fixes and Feature Requests:

nautilus 'replace file' dialog box could give more information. No. THIS ONE ANNOYS ME MORE THAN ANYTHING. I want to stop using nautilus because of this. A LONG, LONG standing missing functionality

Tracker doesn't search for phrases in enclosed quotes. Still shit, as I mentioned above

Audacity does not mesh with PulseAudio. YES - it works! Thanks for the good work

What I want to see included in the next iteration of Ubuntu:

OpenOffice 3.1 - This should be there. Every major improvement of OOo is an important step, though I wonder when they will finally get to beautifying the program and optimizing the speed

Firefox 3.1 - This should be ready I believe (though I'm less than certain) - it should be a pretty big improvement.

Hopefully most of the rest of KDE applications will be ported to QT 4. I'm looking at you: Quanta Plus and K3B

Amarok 2.1 - Amarok 2.0 was somewhat of a disappointment, but it did leave lots of room for potential. Version 2.1 is the first major step towards realizing that potential; it looks like a very good improvement.

Songbird 1.2 - Until it makes it into the Repos, I'm not holding my breath

Moonlight 2.0 - I doubt it will be ready in time, but when this comes out it will be huge. There will be no reason we cannot finally get Netflix on Linux.

Last time I evaluated Ubuntu, I lamented that there wasn't a decent ebook manager out there for Linux (eKitaab still doesn't work). But now there is a program in heavy development, called calibre, that looks really good. It looks set to become one of the best cross-platform ebook managers around. Kudos to the developers.

Wednesday, April 29, 2009

Linux Security Tips and Tricks

BIOS Security

Always set a BIOS password, and change the BIOS settings to disallow booting from floppy. This blocks undesired people from booting your Linux system with a special boot disk, and it stops them from changing BIOS features, such as re-enabling boot from the floppy drive or booting the server without a password prompt.

Grub Security

One thing which could be a security hole is that the user can do too many things with GRUB, because GRUB allows one to modify its configuration and run arbitrary commands at run-time. For example, the user can even read /etc/passwd in the command-line interface with the command cat. So it is necessary to disable all the interactive operations.

Thus, GRUB provides a password feature, so that only administrators can start the interactive operations (i.e. editing menu entries and entering the command-line interface). To use this feature, you need to run the command password in your configuration file:

password --md5 PASSWORD

If this is specified, GRUB disallows any interactive control until you press the key p and enter a correct password. The option --md5 tells GRUB that PASSWORD is in MD5 format. If it is omitted, GRUB assumes that PASSWORD is in clear text.

You can encrypt your password with the command md5crypt. For example, run the grub shell and enter your password:

grub> md5crypt

Password: **********

Encrypted: $1$U$JK7xFegdxWH6VuppCUSIb.

Then, cut and paste the encrypted password to your configuration file.

Also, you can specify an optional argument to password. See this example:

password PASSWORD /boot/grub/menu-admin.lst

In this case, GRUB will load /boot/grub/menu-admin.lst as a configuration file when you enter the valid password.

Another thing which may be dangerous is that any user can choose any menu entry. Usually, this wouldn't be problematic, but you might want to permit only administrators to run some of your menu entries, such as an entry for booting an insecure OS like DOS.

GRUB provides the command lock. This command always fails until you enter the valid password, so you can use it, like this:

title Boot DOS

lock

rootnoverify (hd0,1)

makeactive

chainloader +1

You should insert lock right after title, because any user can execute commands in an entry until GRUB encounters lock.

You can also use the command password instead of lock. In this case the boot process will ask for the password and stop if it is entered incorrectly. Since password takes its own PASSWORD argument, this is useful if you want different passwords for different entries.

LILO Security

Add three parameters to the "/etc/lilo.conf" file: timeout, restricted, and password. With these options set, LILO asks for a password if boot-time options (such as "linux single") are passed to the boot loader.

Step 1

Edit the lilo.conf file (vi /etc/lilo.conf) and add or change the three options :

boot=/dev/hda

map=/boot/map

install=/boot/boot.b

timeout=00 #change this line to 00

prompt

default=linux

restricted #add this line

password=

image=/boot/vmlinuz-2.2.14-12

label=linux

initrd=/boot/initrd-2.2.14-12.img

root=/dev/hda6

read-only

Step 2

The "/etc/lilo.conf" file should be readable by only root because it contains unencrypted passwords.

[root@sahab-desktop /]# chmod 600 /etc/lilo.conf (the file will no longer be world-readable).

Step 3

Run lilo so that the changes in "/etc/lilo.conf" take effect.

root@sahab-desktop /# /sbin/lilo -v (to reinstall the boot loader with the updated lilo.conf).

Step 4

One more security measure you can take to secure the "/etc/lilo.conf" file is to set it immutable, using the chattr command.

* To set the file immutable simply, use the command:

root@sahab-desktop /# chattr +i /etc/lilo.conf

This will prevent any changes (accidental or otherwise) to the "lilo.conf" file.

Disable all special accounts

You should delete all default users and group accounts that you don't use on your system like lp, sync, shutdown, halt, news, uucp, operator, games, gopher etc

To delete a user account :

root@sahab-desktop# userdel lp

To delete a group:

root@sahab-desktop# groupdel lp

Choose a Right password

The password length: The minimum acceptable password length by default when you install your Linux system is 5. This is not enough; it should be at least 8. To change it, edit the login.defs file (vi /etc/login.defs) and change the line that reads:

PASS_MIN_LEN 5

To read:

PASS_MIN_LEN 8

The "login.defs" is the configuration file for the login program.
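If you would rather script the change than edit the file by hand, something along these lines should work. This is only a sketch: it runs against a scratch copy with made-up contents so that nothing under /etc is touched, and the scratch file names are my own.

```shell
# Work on a scratch copy so the real /etc/login.defs is left alone.
workdir=$(mktemp -d)
printf 'PASS_MAX_DAYS 99999\nPASS_MIN_LEN 5\n' > "$workdir/login.defs"

# Replace whatever value PASS_MIN_LEN currently has with 8.
sed 's/^PASS_MIN_LEN[[:space:]].*/PASS_MIN_LEN 8/' \
    "$workdir/login.defs" > "$workdir/login.defs.new"

# Show the resulting setting.
grep '^PASS_MIN_LEN' "$workdir/login.defs.new"
```

Once the result looks right on the copy, the same sed expression can be applied to the real file (as root, and after taking a backup).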

You should enable the shadow password feature. You can use the "/usr/sbin/authconfig" utility to enable the shadow password feature on your system. If you want to convert the existing passwords and group on your system to shadow passwords and groups then you can use the commands pwconv, grpconv respectively.

The root account

The "root" account is the most privileged account on a Unix system. If the administrator forgets to log out from the root prompt before leaving the system, the shell should log out automatically. To do that, you must set the special shell variable "TMOUT" to the timeout in seconds.

Edit your profile file (vi /etc/profile) and add the following line somewhere after the line that reads "HISTFILESIZE=":

TMOUT=3600

The value we enter for the variable "TMOUT=" is in seconds and represents 1 hour (60 * 60 = 3600 seconds). If you put this line in your "/etc/profile" file, then the automatic logout after one hour of inactivity will apply to all users on the system. You can instead set this variable in an individual user's ".bashrc" file to automatically log out just that user after a certain time.

After this parameter has been set on your system, you must logout and login again (as root) for the change to take effect.
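The edit can also be scripted. The sketch below is one possible way to do it, not the only one: set_tmout is a helper name I made up, and it is run here against a scratch file rather than the real /etc/profile.

```shell
# Append TMOUT to a profile file only if it is not already set there.
# The path is an argument so the helper can be tried on a scratch file first.
set_tmout() {
    profile=$1
    seconds=$2
    if ! grep -q '^TMOUT=' "$profile"; then
        printf 'TMOUT=%s\nexport TMOUT\n' "$seconds" >> "$profile"
    fi
}

scratch_profile=$(mktemp)
echo 'HISTFILESIZE=1000' > "$scratch_profile"

set_tmout "$scratch_profile" 3600
set_tmout "$scratch_profile" 3600   # second call changes nothing

cat "$scratch_profile"
```

Checking for an existing TMOUT= line first means the helper can be run repeatedly without piling up duplicate entries.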

Disable all console-equivalent access for regular users

You should disable all console-equivalent access to programs like shutdown, reboot, and halt for regular users on your server.

To do this, run the following command:

root@sahab-desktop# rm -f /etc/security/console.apps/

Where

Disable & uninstall all unused services

You should disable and uninstall all services that you do not use so that you have one less thing to worry about. Look at your "/etc/inetd.conf" file and disable what you do not need by commenting the entries out (by adding a # at the beginning of the line), and then send your inetd process a SIGHUP signal so that it rereads the current "inetd.conf" file. To do this:

Step 1

Change the permissions on "/etc/inetd.conf" file to 600, so that only root can read or write to it.

root@sahab-desktop# chmod 600 /etc/inetd.conf

Step 2

ENSURE that the owner of the file "/etc/inetd.conf" is root.

Step 3

Edit the inetd.conf file (vi /etc/inetd.conf) and disable the services like:

ftp, telnet, shell, login, exec, talk, ntalk, imap, pop-2, pop-3, finger, auth, etc unless you plan to use it. If it's turned off it's much less of a risk.

Step 4

Send a HUP signal to your inetd process

root@sahab-desktop# killall -HUP inetd

Step 5

Set "/etc/inetd.conf" file immutable, using the chattr command so that nobody can modify that file

* To set the file immutable simply, execute the following command:

root@sahab-desktop# chattr +i /etc/inetd.conf

This will prevent any changes (accidental or otherwise) to the "inetd.conf" file. The only person that can set or clear this attribute is the super-user root. To modify the inetd.conf file you will need to unset the immutable flag:

* To unset the immutable simply, execute the following command:

root@sahab-desktop# chattr -i /etc/inetd.conf
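Commenting out the entries in Step 3 can be done with sed instead of by hand. Here is a rough sketch on a scratch file; the three sample entries are only illustrative and are not taken from any particular system.

```shell
# Build a small stand-in for /etc/inetd.conf.
inetd_copy=$(mktemp)
cat > "$inetd_copy" <<'EOF'
ftp     stream  tcp  nowait  root  /usr/sbin/tcpd  in.ftpd -l -a
telnet  stream  tcp  nowait  root  /usr/sbin/tcpd  in.telnetd
time    stream  tcp  nowait  root  internal
EOF

# Put a '#' in front of the ftp and telnet entries; leave everything else alone.
sed -e 's/^ftp[[:space:]]/#&/' -e 's/^telnet[[:space:]]/#&/' \
    "$inetd_copy" > "$inetd_copy.new"

cat "$inetd_copy.new"
```

On a real system, remember that the file has to be made mutable again (chattr -i) before it can be changed, and that inetd needs the HUP signal afterwards.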

TCP_WRAPPERS

By using TCP_WRAPPERS you can make your server secure against outside intrusion. The best policy is to deny all hosts by putting "ALL: ALL@ALL, PARANOID" in the "/etc/hosts.deny" file and then explicitly list the trusted hosts that are allowed to connect to your machine in the "/etc/hosts.allow" file. TCP_WRAPPERS is controlled from two files, and the search stops at the first match:

/etc/hosts.allow

/etc/hosts.deny

Step 1

Edit the hosts.deny file (vi /etc/hosts.deny) and add the following lines:

# Deny access to everyone.

ALL: ALL@ALL, PARANOID

Which means all services from all locations are blocked unless they are permitted access by entries in the allow file.

Step 2

Edit the hosts.allow file (vi /etc/hosts.allow) and add, for example, the following line:

ftp: 34.14.15.99 test.com

Here 34.14.15.99 is the IP address and test.com the host name of one of your client machines that is allowed to use ftp.

Step 3

The tcpdchk program is the tcpd wrapper configuration checker. It examines your tcp wrapper configuration and reports all potential and real problems it can find.

* After your configuration is done, run the program tcpdchk.

root@sahab-desktop# tcpdchk

Don't let system issue file to be displayed

You should not display your system issue file when people log in remotely. To do this, change the telnet line in "/etc/inetd.conf":

telnet stream tcp nowait root /usr/sbin/tcpd in.telnetd

to look like:

telnet stream tcp nowait root /usr/sbin/tcpd in.telnetd -h

Adding the "-h" flag on the end will cause the daemon to not display any system information and just present the user with a login: prompt. I recommend using sshd instead of telnet.

Change the "/etc/host.conf" file

The "/etc/host.conf" file specifies how names are resolved.

Edit the host.conf file (vi /etc/host.conf) and add the following lines:

# Lookup names via DNS first then fall back to /etc/hosts.

order bind,hosts

# We have machines with multiple IP addresses.

multi on

# Check for IP address spoofing.

nospoof on

The order option resolves host names through DNS first and then the hosts file. The multi option determines whether a host in the "/etc/hosts" file can have multiple IP addresses (multiple interfaces ethN).

The nospoof option indicates to take care of not permitting spoofing on this machine.

Immunize the "/etc/services" file

You must immunize the "/etc/services" file to prevent unauthorized deletion or addition of services.

* To immunize the "/etc/services" file, use the command:

root@sahab-desktop# chattr +i /etc/services

Disallow root login from different consoles

The "/etc/securetty" file allows you to specify which TTY devices the "root" user is allowed to log in on. Edit the "/etc/securetty" file to disable any tty that you do not need by commenting it out (# at the beginning of the line).

Blocking anyone to su to root

The su (Substitute User) command allows you to become other existing users on the system. If you don't want anyone to su to root or restrict "su" command to certain users then add the following two lines to the top of your "su" configuration file in the "/etc/pam.d/" directory.

Step 1

Edit the su file (vi /etc/pam.d/su) and add the following two lines to the top of the file:

auth sufficient /lib/security/pam_rootok.so debug

auth required /lib/security/pam_wheel.so group=wheel

Which means only members of the "wheel" group can su to root; it also includes logging. You can add the users to the group wheel so that only those users will be allowed to su as root.

Shell logging

The bash shell stores up to 500 old commands in the "~/.bash_history" file (where "~/" is your home directory) to make it easy for you to repeat long commands. Each user that has an account on the system will have this ".bash_history" file in their home directory. The bash shell should store fewer commands and delete them when the user logs out.

Step 1

The HISTFILESIZE and HISTSIZE lines in the "/etc/profile" file determine how many old commands the ".bash_history" file for all users on your system can hold. I would highly recommend setting HISTFILESIZE and HISTSIZE in the "/etc/profile" file to a low value such as 30.

Edit the profile file (vi /etc/profile) and change the lines to:

HISTFILESIZE=30

HISTSIZE=30

Which means the ".bash_history" file in each user's home directory can store 30 old commands and no more.

Step 2

The administrator should also add the "rm -f $HOME/.bash_history" line to the "/etc/skel/.bash_logout" file, so that each time a user logs out, their ".bash_history" file will be deleted.

Edit the .bash_logout file (vi /etc/skel/.bash_logout) and add the following line:

rm -f $HOME/.bash_history

Disable the Control-Alt-Delete keyboard shutdown command

To do this, edit the inittab file (vi /etc/inittab) and comment out the line listed below with a "#". Change the line:

ca::ctrlaltdel:/sbin/shutdown -t3 -r now

To read:

#ca::ctrlaltdel:/sbin/shutdown -t3 -r now

Now, for the change to take effect type in the following at a prompt:

[root@kapil /]# /sbin/init q

Fix the permissions under "/etc/rc.d/init.d" directory for script files

Fix the permissions of the script files that are responsible for starting and stopping all your normal processes that need to run at boot time. To do this:

root@sahab-desktop# chmod -R 700 /etc/rc.d/init.d/*

Which means only root is allowed to read, write, and execute the script files in this directory.

Hide your system information

By default, when you log in to a Linux box, it tells you the Linux distribution name, version, kernel version, and the name of the server. This is sufficient information for a cracker to learn about your server. You should just prompt users with a "Login:" prompt.

Step 1

To do this, Edit the "/etc/rc.d/rc.local" file and Place "#" in front of the following lines as shown:

# This will overwrite /etc/issue at every boot. So, make any changes you

# want to make to /etc/issue here or you will lose them when you reboot.

#echo "" > /etc/issue

#echo "$R" >> /etc/issue

#echo "Kernel $(uname -r) on $a $(uname -m)" >> /etc/issue

#

#cp -f /etc/issue /etc/issue.net

#echo >> /etc/issue

Step 2

Then, remove the following files: "issue.net" and "issue" under "/etc" directory:

[root@kapil /]# rm -f /etc/issue

[root@kapil /]# rm -f /etc/issue.net

Disable unused SUID/SGID programs

A regular user will be able to run a program as root if it is set to SUID root. A system administrator should minimize the use of these SUID/SGID programs and disable the ones which are not needed.

Step 1

* To find all files with the `s' bits from root-owned programs, use the command:

root@sahab-desktop# find / -type f \( -perm -04000 -o -perm -02000 \) -exec ls -lg {} \;

* To disable the suid bits on selected programs above, type the following commands:

root@sahab-desktop# chmod a-s [program]
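To see what the find expression actually matches before running it across the whole system, you can try it in a scratch directory. This is only a sketch; the directory and file names are invented for the demonstration.

```shell
# Build a scratch directory with one ordinary file and one setuid file.
suid_demo=$(mktemp -d)
touch "$suid_demo/plain" "$suid_demo/setuid"
chmod 0755 "$suid_demo/plain"
chmod 4755 "$suid_demo/setuid"

# Same permission test as the system-wide command, limited to the scratch directory.
found=$(find "$suid_demo" -type f \( -perm -4000 -o -perm -2000 \))
echo "$found"

# Clear the bit again, as "chmod a-s [program]" does.
chmod a-s "$suid_demo/setuid"
remaining=$(find "$suid_demo" -type f \( -perm -4000 -o -perm -2000 \))
```

Only the file with the s bit is reported, and after chmod a-s the same search comes back empty.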

Tuesday, April 28, 2009

Segmentation Fault Unable to Login the System - Ubuntu 8.10

Yesterday I faced a new issue with Ubuntu.

I tried to add a printer via Samba and got a cupsys-related error. When I restarted the "cupsys" service, I got the error "Segmentation fault". After that I restarted the system.

From then on I was unable to log in with my username and password; the system just bounced back to the login screen. I added a new user and also tried the root user on a command-line terminal, but the issue was the same.

To resolve the issue, I rebooted the system into recovery mode and selected the option to drop to a root shell prompt. There I ran the command

root@sahab-desktop:~#login sahab

segmentation fault

Here too I was unable to log in with my username.

I then purged Samba to resolve the issue:

root@sahab-desktop:~#apt-get purge -y samba samba-common samba-client libpam-smbpass

Monday, April 27, 2009

Automatic login with ssh without a password - Linux

Install a ssh client

sudo apt-get install ssh-

Login to the ssh server with your client.


Generate your key pair using the following command: (Don't use any passphrase)

sahab@xxxx:~$ ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/home/sahab/.ssh/id_rsa):

Created directory '/home/sahab/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/sahab/.ssh/id_rsa.

Your public key has been saved in /home/sahab/.ssh/id_rsa.pub.

The key fingerprint is:

2a:23:a3:f8:6c:af:3f:7e:12:b4:6a:80:98:c0:f3:ea sahab@gis

The key's randomart image is:

+--[ RSA 2048]----+

| |

| |

|. |

|.o . |

|+.o. . S |

|= .o . |

| .+.o.. |

|.++ooo. |

|+E+=++ |

+-----------------+

sahab@xxx:~$ cd ~/.ssh

sahab@xxx:~/.ssh$ cat id_rsa.pub >> authorized_keys

sahab@xxx:~/.ssh$ chmod 600 authorized_keys

Log out of the server and go back to your local shell

$ cd

$ cd .ssh

Copy the file id_rsa that was generated on the server into this directory (/home/sahab/.ssh/), using sftp or scp, and make it readable only by you:

$ chmod 600 id_rsa

You should now be able to log in via ssh without being prompted for a password.
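The permission steps matter, because sshd tends to ignore key files that other users can read or write. Below is a small sketch of the server-side part wrapped in a function; install_pubkey is a name I made up, the key is a placeholder string rather than a real key, and everything runs inside a scratch directory instead of a real home directory.

```shell
# Append a public key to authorized_keys and tighten the permissions.
install_pubkey() {
    pubkey_file=$1
    ssh_dir=$2
    mkdir -p "$ssh_dir"
    chmod 700 "$ssh_dir"
    cat "$pubkey_file" >> "$ssh_dir/authorized_keys"
    chmod 600 "$ssh_dir/authorized_keys"
}

scratch_home=$(mktemp -d)
# Placeholder text standing in for the contents of id_rsa.pub.
echo 'ssh-rsa AAAAexample sahab@example' > "$scratch_home/id_rsa.pub"

install_pubkey "$scratch_home/id_rsa.pub" "$scratch_home/.ssh"
ls -l "$scratch_home/.ssh/authorized_keys"
```

Using >> rather than > keeps any keys that are already in authorized_keys.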

Sunday, April 26, 2009

Backup and restore your system - Linux

During that time you might have wondered why it wasn't possible to just add the whole c:\ to a big zip-file. This is impossible because in Windows, there are lots of files you can't copy or overwrite while they are being used, and therefore you needed specialized software to handle this.

Well, I'm here to tell you that those things, just like rebooting, are Windows CrazyThings (tm). There's no need to use programs like Ghost to create backups of your Ubuntu system (or any Linux system, for that matter). In fact, using Ghost might be a very bad idea if you are using anything but ext2. Ext3, the default Ubuntu filesystem, is seen by Ghost as a damaged ext2 partition, and Ghost does a very good job of screwing up your data.

1: Backing-up

"What should I use to backup my system then?" might you ask. Easy; the same thing you use to backup/compress everything else; TAR. Unlike Windows, Linux doesn't restrict root access to anything, so you can just throw every single file on a partition in a TAR file!

To do this, become root, change to the root of the filesystem, and run the full backup command:

sudo su

cd /

tar cvpzf backup.tgz --exclude=/proc --exclude=/lost+found --exclude=/backup.tgz --exclude=/mnt --exclude=/sys /

The 'tar' part is, obviously, the program we're going to use.

'cvpzf' are the options we give to tar: 'create archive' (obviously), 'verbose' (list each file as it is added), 'preserve permissions' (to keep the same permissions on everything), 'gzip' to keep the size down, and 'file' to name the archive that follows.

Next, the name the archive is going to get: backup.tgz in our example.

Now come the directories we want to exclude. We don't want to back up everything, since some directories aren't very useful to include. Also make sure you exclude the archive file itself, or else you'll get weird results.

Last comes the root of the directory we want to back up. Since we want to back up everything: /

You might also not want to include the /mnt folder if you have other partitions mounted there or you'll end up backing those up too. Also make sure you don't have anything mounted in /media (i.e. don't have any cd's or removable media mounted). Either that or exclude /media.

EDIT : kvidell suggests below we also exclude the /dev directory. I have other evidence that says it is very unwise to do so though.

Well, if the command agrees with you, hit enter (or return, whatever) and sit back&relax. This might take a while.

Afterwards you'll have a file called backup.tgz in the root of your filesystem, which is probably pretty large. Now you can burn it to DVD or move it to another machine, whatever you like!

EDIT2:

At the end of the process you might get a message along the lines of 'tar: Error exit delayed from previous errors' or something, but in most cases you can just ignore that.

Alternatively, you can use Bzip2 to compress your backup. This means higher compression but lower speed. If compression is important to you, just substitute the 'z' in the command with 'j', and give the backup the right extension.

That would make the command look like this:

tar cvpjf backup.tar.bz2 --exclude=/proc --exclude=/lost+found --exclude=/backup.tar.bz2 --exclude=/mnt --exclude=/sys /

2: Restoring

Warning: Please, for goodness sake, be careful here. If you don't understand what you are doing here you might end up overwriting stuff that is important to you, so please take care!

Well, we'll just continue with our example from the previous chapter; the file backup.tgz in the root of the partition.

Once again, make sure you are root and that you and the backup file are in the root of the filesystem.

One of the beautiful things about Linux is that this'll work even on a running system; no need to screw around with boot-cd's or anything. Of course, if you've rendered your system unbootable you might have no choice but to use a live-cd, but the results are the same. You can even remove every single file of a Linux system while it is running with one command. I'm not giving you that command though!

Well, back on-topic.

This is the command that I would use:

tar xvpfz backup.tgz -C /

Or, if you used bz2:

tar xvpfj backup.tar.bz2 -C /

Just hit enter/return/your brother/whatever and watch the fireworks. Again, this might take a while. When it is done, you have a fully restored Ubuntu system! Just make sure that, before you do anything else, you re-create the directories you excluded:

mkdir proc

mkdir lost+found

mkdir mnt

mkdir sys

etc...
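If you want to get a feel for the backup and restore commands before pointing them at /, you can rehearse them on a throwaway directory tree. The sketch below does that; the directory names are made up, and it uses -C so that the archive holds relative paths (./etc/...) rather than the absolute ones used in the commands above.

```shell
# A tiny stand-in for a filesystem, plus an empty place to restore into.
src=$(mktemp -d)
dest=$(mktemp -d)
archive=$(mktemp -d)/backup.tgz

mkdir -p "$src/etc" "$src/proc"
echo 'demo' > "$src/etc/hostname"
echo 'skip' > "$src/proc/version"

# c = create, p = preserve permissions, z = gzip, f = archive name.
# --exclude leaves the stand-in proc directory out of the archive.
tar -C "$src" --exclude=./proc -cpzf "$archive" .

# x = extract; -C chooses the directory the files are restored into.
tar -xpzf "$archive" -C "$dest"

# The excluded directory is not in the archive, so it is re-created by hand.
ls "$dest"
mkdir "$dest/proc"
```

The excluded directory does not come back on restore, which is why the mkdir step is needed.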

2.1: GRUB restore

Now, if you want to move your system to a new harddisk or if you did something nasty to your GRUB (like, say, install Windows), You'll also need to reinstall GRUB.

For restoring the grub click here

Vi Editor How To

Using the vi editor

To insert new text

esc i ( You have to press 'escape' key then 'i')

To save file

esc : w (Press 'escape' key then 'colon' and finally 'w')

To save file with file name (save as)

esc :w "filename"

To quit the vi editor

esc :q

To quit without saving

esc :q!

To save and quit vi editor

esc :wq

To search for specified word in forward direction

esc /word (Press 'escape' key, type /word-to-find, then press Enter)

To continue with search "n"

To search for specified word in backward direction

esc ?word (Press 'escape' key, type ?word-to-find, then press Enter)

To copy the line where cursor is located

esc yy

To paste the text just deleted or copied at the cursor

esc p

To delete entire line where cursor is located

esc dd

To delete word from cursor position

esc dw

To find all occurrences of a given word and replace them globally without confirmation

esc :%s/word-to-find/word-to-replace/g

For e.g. :%s/sahab/sahabcse/g

Here the word "sahab" is replaced with "sahabcse"

To find all occurrences of a given word and replace them globally with confirmation

esc :%s/word-to-find/word-to-replace/gc

To run shell command like ls, cp or date etc within vi

esc :!shell-command

For e.g. :!pwd
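A note on the address in front of the substitute command: in vi, % means every line of the file, while $ means only the last line. sed uses the same $ address, so the difference is easy to see outside the editor. The sample text below is made up.

```shell
sample=$(mktemp)
printf 'sahab one\nsahab two\nsahab three\n' > "$sample"

# No address: the substitution runs on every line (like :%s in vi).
all_lines=$(sed 's/sahab/sahabcse/g' "$sample")

# Address "$": only the last line is changed (like :$s in vi).
last_only=$(sed '$s/sahab/sahabcse/g' "$sample")

echo "$all_lines"
echo "---"
echo "$last_only"
```

For a replace across the whole file, the % form is the one to use.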

Saturday, April 25, 2009

zimbra cluster configuration - Redhat

Zimbra Cluster notes.

To start the Red Hat Cluster Service on a member, type the following commands in this order. Remember to enter the command on each node before proceeding to the next command.

1. service ccsd start. This is the cluster configuration system daemon that synchronizes configuration between cluster nodes.

2. service cman start. This is the cluster heartbeat daemon. It returns when both nodes have established heartbeat with one another.

3. service fenced start. This is the cluster I/O fencing system that allows cluster nodes to reboot a failed node during failover.

4. service rgmanager start. This manages cluster services and resources. The service rgmanager start command returns immediately, but initializing the cluster and bringing up the Zimbra Collaboration Suite application for the cluster services on the active node may take some time. After all commands have been issued on both nodes, run clustat command on the active node, to verify that the cluster service has been started. When clustat shows all services are running on the active node, the cluster configuration is complete.

For the cluster service that is not running on the active node, run clusvcadm -d

[root@sahab-desktop]#clusvcadm -d mail1.example.com

This disables the service by stopping all associated Zimbra processes, releasing the service IP address, and unmounting the service’s SAN volumes.

To enable a disabled service, run clusvcadm -e

[root@sahab-desktop]#clusvcadm -e mail1.example.com -m node1.example.com

Testing the Cluster Set up

To perform a quick test to see if failover works:

1. Log in to the remote power switch and turn off the active node.

2. Run tail -f /var/log/messages on the standby node. You will observe the cluster become aware of the failed node, I/O fence it, and bring up the failed service on the standby node.

For Installing zimbra please follow click here

Wednesday, April 22, 2009

Resolving a Fatal error: Call to undefined function mysql_connect() in ubuntu

Detailed steps are given below.

Symptoms

When the page is loaded in the web browser, you receive the error, Fatal error: Call to undefined function mysql_connect().

Fix

1. Verify that your installation of PHP has been compiled with MySQL support. Create a test web page containing <?php phpinfo(); ?> and load it in your browser. Search the page for MySQL. If you don't see it, you need to recompile PHP with MySQL support, or reinstall a PHP package that has it built in.

2. Verify that the line to load the extension in php.ini has been uncommented. In Linux, the line is extension=mysql.so and in Windows, the line is extension=php_mysql.dll. Uncomment the line by removing the semicolon. You might also need to configure the extension_dir variable.

3. On Ubuntu, install the PHP MySQL packages:

#sudo apt-get install php5-mysql

#sudo apt-get install php5-cgi

4. Then restart Apache and MySQL:

#sudo /etc/init.d/apache2 restart

#sudo /etc/init.d/mysql restart